Securing AI in the Enterprise

Trends and Insights on Adoption, Implementation, and Posture

The rapid advances in AI/ML in the recent past, most notably the arrival of highly scaled generative AI approaches like transformers, have captured the attention of consumers and enterprises alike. In turn, we have seen sharply increased focus in the public and private markets on how companies are incorporating AI into their business strategy: a dizzying array of new startups at the model, infrastructure, and application layers is rising to meet the ever-growing number of AI task forces and strategic initiatives being launched by enterprises of all sizes.

As long-time investors in both artificial intelligence and cybersecurity as core thesis priorities, we at Acrew Capital wanted to take this moment to better understand which business use case and security posture considerations enterprises were using to make technology strategy decisions in this new AI-first innovation cycle. To support our thinking, we ran a survey with several dozen enterprise business leaders and senior technical practitioners/executives in our network to get a closer look at how their organizations were navigating these choices, and what that might imply about where there is the most or least opportunity for startups. We’re sharing our findings below; if you’re currently building in an adjacent category and/or working on products that facilitate scaled, compliant enterprise AI adoption, we’d love to meet you.

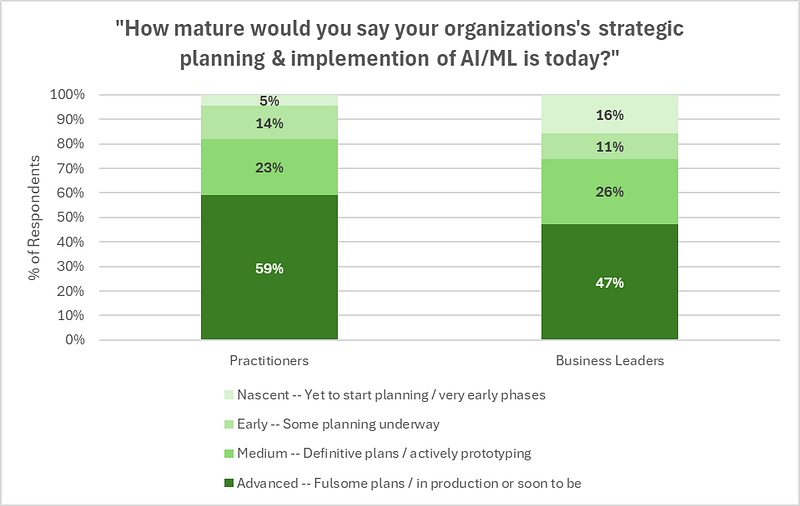

1. Enterprises are actively planning and prototyping with AI

When asked, both subsets of respondents, business leaders (e.g. CEOs, COOs, CROs, CMOs) and technical practitioners (e.g. CTOs, CISOs, CIOs, CDOs), confirmed on average that the planning & implementation of AI features is well underway at their organizations. This is broadly in line with other recent studies of enterprise AI adoption (e.g. IBM’s), though our bifurcation of respondents also highlights how business leaders generally viewed their organization’s AI strategy/implementation as less mature than practitioners did.

Acrew POV: AI’s new strategic resonance has catalyzed greater awareness re: its longtime operational relevance.

In our view, the awareness gap between business leader and technical practitioner respondents reflects a broader pattern we’ve seen as long-time investors in AI/ML: enterprise leadership is frequently unaware of the extent to which AI (both discriminative and generative) is already powering their products. For example, one of the most consistent “wow” moments for customers of Acrew portfolio company Protect AI is seeing the full depth, breadth, and context of the AI models in their company’s environment via a single pane of glass with its Radar product.

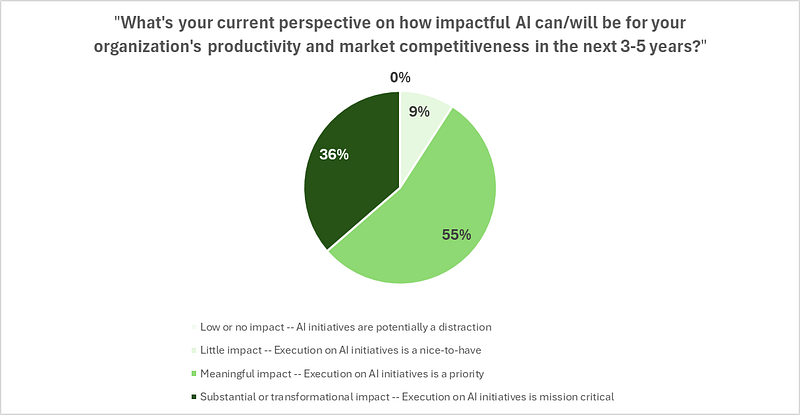

2. Enterprises are near-universally bullish on long-run impact and criticality of implementing AI

In line with the front-footed approach survey respondents are taking to strategy & planning, enterprise leaders reported being very optimistic on the whole re: the potential of AI to positively impact their businesses. Over 90% of respondents predicted that their AI initiatives would be meaningful or even transformational to their business over the coming 3–5 years, confirming that the technology is a strong priority, if not mission critical, to their organization’s continued productivity improvements and market competitiveness.

Acrew POV: startups will lead the way in enabling enterprise AI use cases.

Just as in past innovation cycles, our team is particularly excited about the key role startups will play in catalyzing the coming transformations that advanced AI systems will bring to both the infrastructure and application layers of modern corporate enterprises.

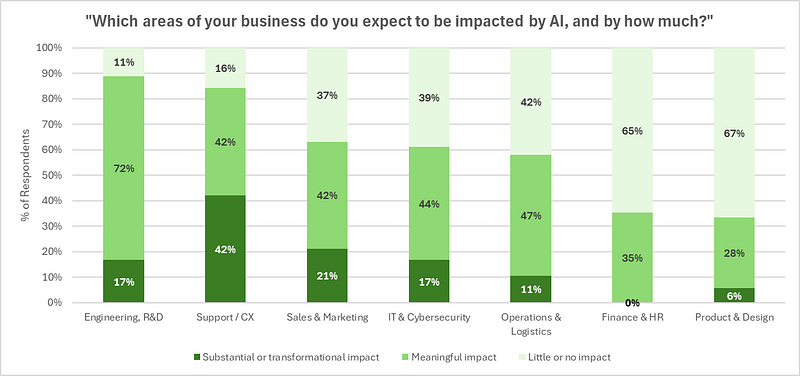

3. Enterprises see the highest impact potential for AI systems in engineering and support

In forecasting the potential for business value across function areas, engineering and customer support were far and away the two categories predicted to be most impacted. The responses broadly reflect the use cases where generative AI systems have already demonstrated production-ready value propositions. AI coding assistants / “autocompletes” like GitHub Copilot are already widely credited by engineers with increasing productivity by 10–20%+, facilitating but not quite transforming the function, in accordance with survey respondents’ POV (although multiple startups are chasing the greater prize as well, e.g. Devin, Magic, poolside). By contrast, customer support was perceived by respondents as far more likely to be wholly changed if not outright automated, which is reflected well by the number of startups offering to “sell work” in the function and not just software (e.g. Maven, Sierra, Decagon), allowing human agents, where needed, to focus solely on high-complexity requests. At the other end, respondents reported expecting only modest impacts on functions like finance, product management, and design.

Acrew POV: the value unlock of AI systems to function areas like finance and product is underrecognized.

Time will ultimately tell, but our perspective is that these findings underplay the long-run value of generative AI in several categories, and could be more a signal of recency bias than of a lack of opportunity. Our fintech thesis team has been very close to this subject for some time now (with their own piece on the potential of AI x financial services forthcoming); they continually meet with compelling teams pioneering new AI-powered use cases across core tasks like financial data management (e.g. Safebooks AI, Rogo Data), claims automation & underwriting (e.g. Stream Claims, Sixfold, Roots Automation), and accounting/audit (e.g. Puzzle, Numeric, and Stacks). Similarly, we’ve seen a groundswell of innovation in the core workflows that make up product management & design, from productivity tools that facilitate improved documentation & knowledge sharing (e.g. Scribe, Augment, Writer) to automated design generation and UX testing (e.g. Tempo Labs, FlutterFlow). We’re bullish on the capacity of AI-native workflows to reimagine knowledge work as we know it, and predict that enterprise buyers and their wallets will be significantly more attuned to the cross-category potential of the technology in the next 12–24 months, particularly as base model generalization, reasoning, and agentic capabilities continue to improve.

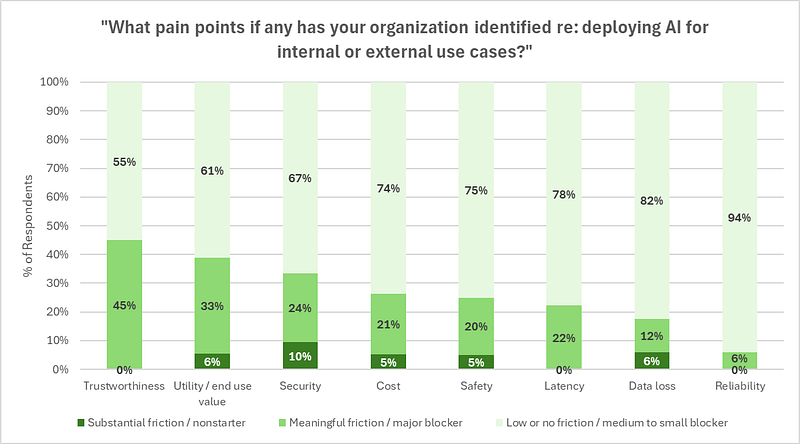

4. Despite the enthusiasm, enterprises still face significant pain points re: adopting generative AI in particular

Enterprises are keenly aware of the technological and economic problems of implementing genAI into their production applications. When asked about the biggest pain points to adoption, respondents pointed most often to the well-documented challenges re: hallucinations / limited trustworthiness of outputs. Perhaps more consequentially, respondents called out a lack of utility and end user value as a key friction as well, highlighting that — despite the aforementioned enthusiasm — many enterprises are still in the midst of understanding where and/or how generative AI can improve their existing products and services, or enable the creation of new ones.

Acrew POV: these results signal that most generative AI solutions are still too early for mainstream enterprise adoption.

We’ve noticed this pattern before in emerging technologies: in the discovery phase, identifying utility is the top concern (and prototyping is the dominant method of implementation). As enterprise understanding of generative AI improves, we expect that managing security risks (like prompt injection or malicious code in models; currently the third-rated friction) will rise to be the top concern, as it typically is in other technology implementations where the path to value is more mature. We believe we’ll see significant strides here in the next 12–24 months as the buildout of AI infrastructure continues to soar, though the current picture reinforces that we’re still in the early phases of this innovation cycle.

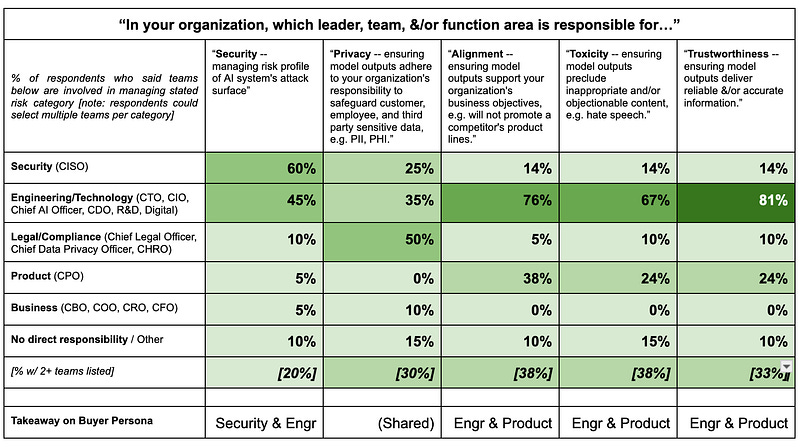

5. Ownership of key generative AI safety criteria is relatively diffuse today, spanning multiple enterprise function heads

Zeroing in on the safety risks of generative AI systems in particular, we also asked respondents to tell us which teams were responsible for the various aspects of safely & securely deploying genAI-powered applications. While some answers adhered to expectations (e.g. CISOs were by and large reported to be responsible for managing genAI security/attack surface risk), our bigger takeaway was that ownership of managing such enterprise genAI risks was typically diffuse across functions: respondents generally indicated that they rely on some combination of their CTO/engineering org and one or more of Legal, Product, and Security to navigate these issues. For most criteria we asked about, this dynamic equated to approx. a third of respondents indicating that decision-making responsibility was currently handled by committee across multiple teams or functional heads.

Acrew POV: the buying centers for most categories of genAI safety tooling are not mature enough to support repeatable GTM motions for startup vendors.

We believe that the practical reality of how organizations are grappling with genAI safety issues today will have meaningful implications for the enterprise startups selling solutions in these categories in the near term, e.g. which stakeholders they must get on board to land customers. Most notably, it appears that the purchasing dynamic for genAI privacy tooling may be the most fraught, as the Legal function does not have the technical depth to remain the long-run buying center (we observed a similar dynamic re: tooling for GDPR and similar compliance regimes). We see this as another sign that enterprise interest still significantly outpaces enterprise readiness re: safely deploying genAI use cases.

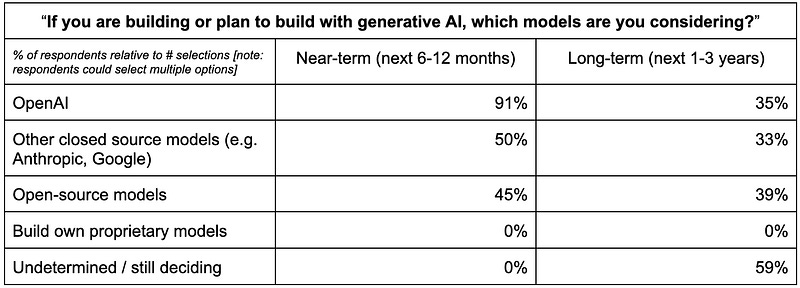

6. OpenAI leads the pack in early experimentation and prototyping, but enterprises are far less certain on their long-term model layer strategy

At the model layer of generative AI, responses aligned with the public headlines of OpenAI’s dominant mindshare / market share: over 90% of respondents indicated that they’re currently building or planning to build with OpenAI models in the next 6–12 months. OpenAI is the clear first mover for enterprise AI, and has continued to move fast on R&D and GTM to maintain both its performance edge and ease of implementation.

The perspective of enterprises on model preferences changes dramatically when they are asked to look several years out: the preference for OpenAI evaporates vs. other closed-source model providers or building on top of open-source alternatives. More notable is the stark (albeit rational in our view) increase in uncertainty: nearly 60% of respondents told us their organizations are still hashing out their long-term model layer choices.

Acrew POV: enterprises are keen to maintain their optionality / avoid lock-in to OpenAI or any single model vendor at this time.

Taken together, the comparison between responses on near vs. long term preferences drives home three points:

The long game for enterprise model layer dominance is far from settled, and may yet be settled by factors unrelated to those that determined the early winners (e.g. as frontier model providers increasingly offer similar capabilities, we’ve heard more and more that enterprises are making purchasing decisions based on their existing cloud provider relationships, further pushing the space toward the tri-polar axis of Azure-OpenAI, GCP-Gemini, and AWS-Anthropic).

We’re still very much in the early innings of strategic planning for genAI in the enterprise. Even the more forward-thinking enterprises we talked to are wisely taking a wait-and-see approach to buying preference at the model layer. The continued cost vs. performance improvements of the frontier models are likely to shape the conversation for the foreseeable future, and so it behooves enterprise leaders to take a flexible, nondogmatic view on how and where generative AI models will ultimately fit into their product strategy.

Lastly, we wanted to highlight that no respondents told us that they planned to build their own proprietary LLMs from scratch. Given the exorbitant costs re: compute, technical personnel, and data to create a model of differentiated performance (and the nontrivial likelihood that generalized models will still outperform, e.g. BloombergGPT vs. GPT-4), we’re seeing most enterprises choose to at most fine-tune open-source models with their own data rather than build their own. This too can be interpreted as a strategic bent toward flexibility at the model layer by minimizing capex.

By Theresia Gouw, Asad Khaliq, Mark Kraynak, Kwabena “KB” Nimo